The passive chatbot is dead. Well, not dead, but definitely obsolete. For three years, the world treated Artificial Intelligence like a very smart, very expensive encyclopedia—we asked, it answered, and then it sat there waiting for the next command. That dynamic is over. Enter Agentic AI.

This isn’t just a smarter version of ChatGPT. It’s a fundamental structural change. While a standard Large Language Model (LLM) can write a convincing email, an agent can write it, look up the recipient’s address in Salesforce, click send, and schedule a follow-up meeting based on the reply. It doesn’t just talk; it does.

Major players—Microsoft, Google, OpenAI—are betting the farm on this in 2025. Why? Because the “chat” interface hit a productivity ceiling. Agents smash through it.

What Is Agentic AI?

Think of a standard LLM as a brain in a jar. It has immense reasoning power, but it’s disconnected. Agentic AI gives that brain hands.

It hooks the model up to external tools: web browsers, file systems, and enterprise software APIs. When you give an agent a goal (say, “plan a Tokyo itinerary under $2,000”), it doesn’t just generate a plausible-looking list of text. It acts. It breaks the goal into sub-tasks. It checks live flight databases. It compares hotel rates. It verifies availability. If a flight is sold out, the agent doesn’t crash; it self-corrects and finds another route.

The Core Architecture

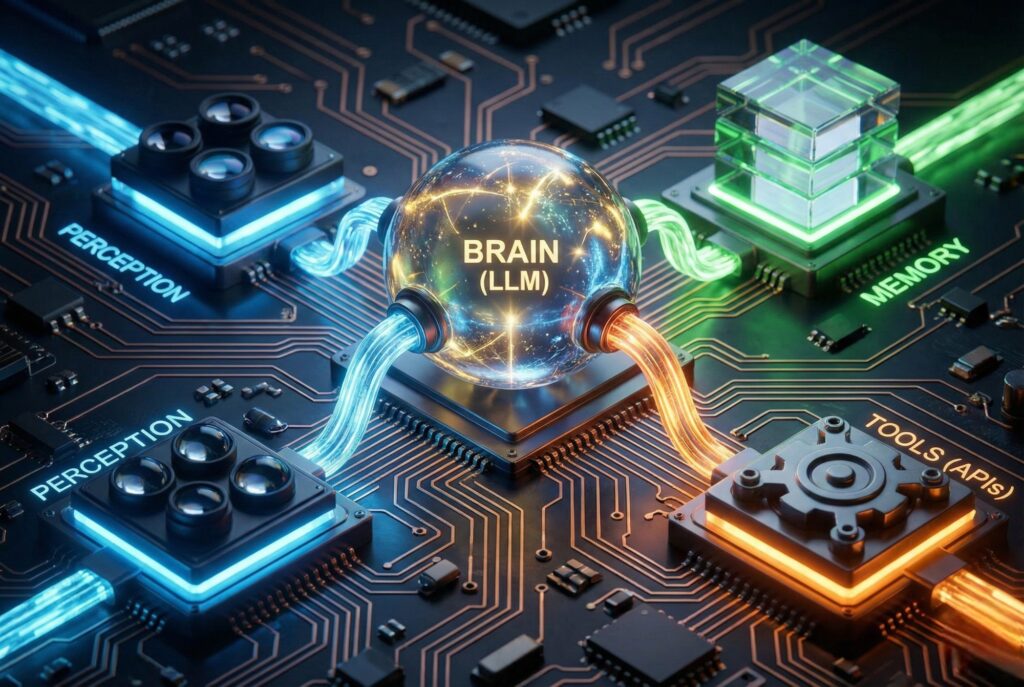

It’s not magic; it’s engineering. The architecture is usually broken down into four pillars:

- Perception: The ability to “read” the digital room—parsing a screen or scraping a database.

- Brain (Reasoning): The LLM that formulates the plan.

- Memory: A storage log. Without this, the agent forgets step one by the time it reaches step three.

- Tools: The software bridges (APIs) that let the AI actually click buttons or run code.
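Here is a minimal sketch of how the four pillars might fit together in code. Every name in it (`Agent`, `perceive`, `step`, the `lookup_price` tool) is hypothetical, and the "brain" is a hard-coded rule standing in for a real LLM call, so treat it as an illustration of the shape rather than a working framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Toy container for the four pillars; not a real framework API."""
    brain: Callable[[str, List[str]], str]            # Brain: picks the next tool name
    tools: Dict[str, Callable[[str], str]]            # Tools: name -> callable
    memory: List[str] = field(default_factory=list)   # Memory: log of past steps

    def perceive(self, raw: str) -> str:
        # Perception: normalize whatever the environment shows us.
        return raw.strip().lower()

    def step(self, raw_observation: str) -> str:
        observation = self.perceive(raw_observation)
        tool_name = self.brain(observation, self.memory)
        result = self.tools[tool_name](observation)
        self.memory.append(f"{tool_name}: {result}")   # remember what happened
        return result


# Stand-in "brain": a real agent would call an LLM here (assumption).
def rule_based_brain(observation: str, memory: List[str]) -> str:
    return "lookup_price" if "price" in observation else "echo"


agent = Agent(
    brain=rule_based_brain,
    tools={
        "lookup_price": lambda text: "price lookup requested",
        "echo": lambda text: text,
    },
)
print(agent.step("  What is the PRICE of a Tokyo flight? "))
print(agent.memory)
```

Swap the rule-based function for a model call and the lambdas for real API clients, and the structure stays the same: perceive, decide, act, remember.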

Generative vs. Agentic: The Difference

Don’t confuse the content boom of 2023 with the action wave of 2026. Generative AI makes stuff. Agentic AI gets work done.

| Feature | Generative AI (Chatbots) | Agentic AI (Autonomous Agents) |
|---|---|---|
| Primary Function | Content generation (text, images, code) | Task execution and goal achievement |
| Interaction Style | Passive (Wait for prompt, give response) | Proactive (Receive goal, loop until done) |
| Tool Use | Limited (mostly retrieval or simple code) | Extensive (Web browsing, API calls, file management) |
| Autonomy | Low (Requires human to guide every step) | High (Self-corrects and plans independently) |
| State Awareness | Stateless (Forgets context after chat closes) | Persistent (Maintains memory of long-term goals) |

The Architecture of Autonomy

The shift from chatbot to agent relies on a “cognitive loop.” Standard AI is linear: Input → Output. Agents are circular. They talk to themselves.

Consider a software engineering agent tasked with fixing a bug. The workflow looks like this:

- Plan: The agent reads the ticket. It decides which files to inspect.

- Act: It uses a tool to open the code.

- Observe: It spots the syntax error.

- Reason: It drafts a fix.

- Test: It runs the code. Crucially, if the test fails, the agent remembers the failure, alters the plan, and tries again.

This loop (Plan, Act, Observe, Reason, Test) spins until the goal is met or the agent realizes it’s stuck.
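The loop can be sketched in a few lines. This is a toy under stated assumptions: `run_agent_loop`, `toy_plan`, `toy_act`, and `toy_test` are made-up names, the "fixes" are hard-coded candidate expressions instead of LLM output, and "running the code" is reduced to checking whether Python can compile the expression. The point is the control flow: remember failures, replan, and stop after a fixed budget.

```python
from typing import Callable, List, Tuple


def run_agent_loop(
    plan: Callable[[str, List[str]], str],
    act: Callable[[str], str],
    test: Callable[[str], bool],
    goal: str,
    max_iterations: int = 5,
) -> Tuple[bool, List[str]]:
    """Repeat plan -> act -> test until the test passes or the budget runs out."""
    failures: List[str] = []               # memory of what did not work
    for _ in range(max_iterations):
        attempt = plan(goal, failures)     # Plan/Reason: take past failures into account
        result = act(attempt)              # Act: run a tool
        if test(result):                   # Observe/Test: check the outcome
            return True, failures
        failures.append(attempt)           # remember the failure, then try again
    return False, failures                 # stuck: hand the problem back to a human


# Hypothetical candidate fixes; a real agent would generate these with an LLM.
CANDIDATE_FIXES = ["x +", "x + * 1", "x + 1"]


def toy_plan(goal: str, failures: List[str]) -> str:
    # Pick the first candidate that has not already failed.
    return next((fix for fix in CANDIDATE_FIXES if fix not in failures), CANDIDATE_FIXES[-1])


def toy_act(fix: str) -> str:
    # "Run" the fix by asking Python whether the expression even parses.
    try:
        compile(fix, "<fix>", "eval")
        return "ok"
    except SyntaxError:
        return "syntax error"


def toy_test(result: str) -> bool:
    return result == "ok"


solved, failed_attempts = run_agent_loop(toy_plan, toy_act, toy_test, "fix the bug")
print(solved, failed_attempts)
```

The `max_iterations` cap is what turns "realizes it's stuck" from a hope into a guarantee.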

Real-World Applications

We are done with theoretical demos. In 2025, this tech is already clocking in for work.

Software Engineering

Cognition’s Devin was pitched as an AI that could handle entire development tickets, not just snippets. Agents in this class set up their own environments. They install dependencies. They debug their own typos. This pushes human developers out of the weeds and toward system architecture roles.

Enterprise Automation

Supply chains are messy; agents thrive on the mess. An agent monitors inventory 24/7. If a shipment gets stuck in a blizzard, the agent identifies the delay, searches for alternative suppliers, calculates the cost variance, and presents the human manager with three distinct options. No panic, just data.
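The "calculate the cost variance, present three options" step is plain arithmetic once the quotes are in hand. A rough sketch, assuming a made-up `Quote` record and invented supplier names and prices; a real agent would pull these from procurement systems.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Quote:
    supplier: str
    unit_cost: float       # price per unit, in dollars
    lead_time_days: int


def top_options(
    baseline_unit_cost: float,
    quotes: List[Quote],
    units: int,
    limit: int = 3,
) -> List[Tuple[Quote, float]]:
    """Return the cheapest `limit` quotes with their cost variance vs. the baseline."""
    ranked = sorted(quotes, key=lambda q: (q.unit_cost, q.lead_time_days))
    return [
        (q, round((q.unit_cost - baseline_unit_cost) * units, 2))
        for q in ranked[:limit]
    ]


# Illustrative numbers only.
quotes = [
    Quote("Supplier A", 4.40, 7),
    Quote("Supplier B", 4.10, 14),
    Quote("Supplier C", 5.00, 3),
    Quote("Supplier D", 4.25, 10),
]
options = top_options(baseline_unit_cost=4.00, quotes=quotes, units=1000)
for quote, variance in options:
    print(f"{quote.supplier}: +${variance:.2f}, arrives in {quote.lead_time_days} days")
```

Ranking by price alone is a design choice for the sketch; a manager stuck in a blizzard may care more about lead time, which is exactly why the human picks from the shortlist.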

The Challenges: Safety and Costs

It’s not all upside. Giving an AI autonomy introduces specific, dangerous risks that passive chatbots never had.

The Infinite Loop: Agents get confused. An agent trying to book a flight might hit a “server busy” error and retry ten thousand times in a minute, effectively launching a denial-of-service attack on the vendor or burning through thousands of dollars in compute credits.
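A common guard against this is a hard retry cap combined with exponential backoff. The sketch below assumes the "server busy" condition surfaces as Python's built-in `ConnectionError`; `call_with_backoff`, `RetryBudgetExceeded`, and `flaky_booking` are hypothetical names, and the default delays are placeholders, not recommendations.

```python
import time
from typing import Callable


class RetryBudgetExceeded(Exception):
    """Raised when the agent has used up its allowed attempts."""


def call_with_backoff(
    action: Callable[[], str],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try `action` at most `max_attempts` times, doubling the wait after each failure."""
    for attempt in range(max_attempts):
        try:
            return action()
        except ConnectionError:
            if attempt == max_attempts - 1:
                break                                    # out of budget: stop retrying
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay)                                 # 0.5s, 1s, 2s, ... capped at max_delay
    raise RetryBudgetExceeded(f"gave up after {max_attempts} attempts")


# Demo with a fake booking call that fails twice, then succeeds.
attempts = {"count": 0}


def flaky_booking() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("server busy")
    return "booked"


# Pass a no-op sleep so the demo finishes instantly.
print(call_with_backoff(flaky_booking, sleep=lambda seconds: None))
```

With five attempts and a doubling delay, the worst case is five requests spread over several seconds instead of ten thousand in a minute.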

Cost and Latency: Agents are token-hungry. A single user request might trigger 500 internal “thoughts” and actions. That makes agentic workflows significantly slower and more expensive than a simple chat query.
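To get a feel for the gap, here is a back-of-the-envelope estimate. The token count per step and the price per million tokens are placeholder assumptions, not any vendor's real pricing, and `estimate_cost` is a made-up helper. It also assumes a flat token count per step, which likely understates real agents that resend a growing context on every step.

```python
def estimate_cost(steps: int, tokens_per_step: int, price_per_million_tokens: float) -> float:
    """Rough dollar cost: total tokens times the per-token price."""
    total_tokens = steps * tokens_per_step
    return total_tokens * price_per_million_tokens / 1_000_000


# Placeholder assumptions: 2,000 tokens per step, $5 per million tokens.
chat_cost = estimate_cost(steps=1, tokens_per_step=2_000, price_per_million_tokens=5.0)
agent_cost = estimate_cost(steps=500, tokens_per_step=2_000, price_per_million_tokens=5.0)

print(f"single chat reply: ${chat_cost:.2f}")
print(f"500-step agent run: ${agent_cost:.2f}")
print(f"ratio: {agent_cost / chat_cost:.0f}x")
```

Under these assumptions the cost scales linearly with the number of steps, so a 500-step run costs 500 times a single reply. Latency follows the same logic, since the steps run one after another.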

Security: You gave the AI “hands.” Now it can delete files. It can send Venmo payments. Organizations are scrambling to build “identity management” for AI employees, strictly limiting what these digital workers can touch.
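One simple way to limit what an agent can touch is an allowlist sitting between the model and its tools. The sketch below is illustrative only: `ScopedToolbox`, `PermissionDenied`, and the two file tools are hypothetical, and real deployments would layer this on top of proper credentials and audit logs.

```python
from typing import Callable, Dict, FrozenSet


class PermissionDenied(Exception):
    """Raised when the agent asks for a tool outside its allowlist."""


class ScopedToolbox:
    """Wraps a set of tools and only lets explicitly allowed ones run."""

    def __init__(self, tools: Dict[str, Callable[..., str]], allowed: FrozenSet[str]):
        self._tools = tools
        self._allowed = allowed

    def call(self, name: str, *args, **kwargs) -> str:
        if name not in self._allowed:
            raise PermissionDenied(f"agent may not call {name!r}")
        return self._tools[name](*args, **kwargs)


# Hypothetical tools; these only return strings, nothing touches the disk.
tools = {
    "read_file": lambda path: f"contents of {path}",
    "delete_file": lambda path: f"deleted {path}",
}

# This agent gets read access only.
toolbox = ScopedToolbox(tools, allowed=frozenset({"read_file"}))
print(toolbox.call("read_file", "report.txt"))

try:
    toolbox.call("delete_file", "report.txt")
except PermissionDenied as error:
    print(error)
```

The check lives outside the model on purpose: no matter what the LLM decides, the destructive call never runs unless a human put it on the list.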

The Future of Work

The evolution from chatbots to autonomous workers means humans are shifting from creators to managers.

The role of the human is no longer to write the code or draft the report. The human role is to define the goal, review the agent’s plan, and sign off on the result. We are entering the age of the AI Coworker. These systems handle the execution; we provide the strategy. In 2026, productivity isn’t about how fast you type. It’s about how well you orchestrate your digital staff.