The European Union’s AI Act isn’t just coming. It’s here. The world’s first comprehensive legal framework for artificial intelligence entered into force on August 1, 2024, replacing years of speculation with hard compliance deadlines. For developers and C-suite executives, the days of theoretical preparation are over. Now, you need to know exactly where you stand.

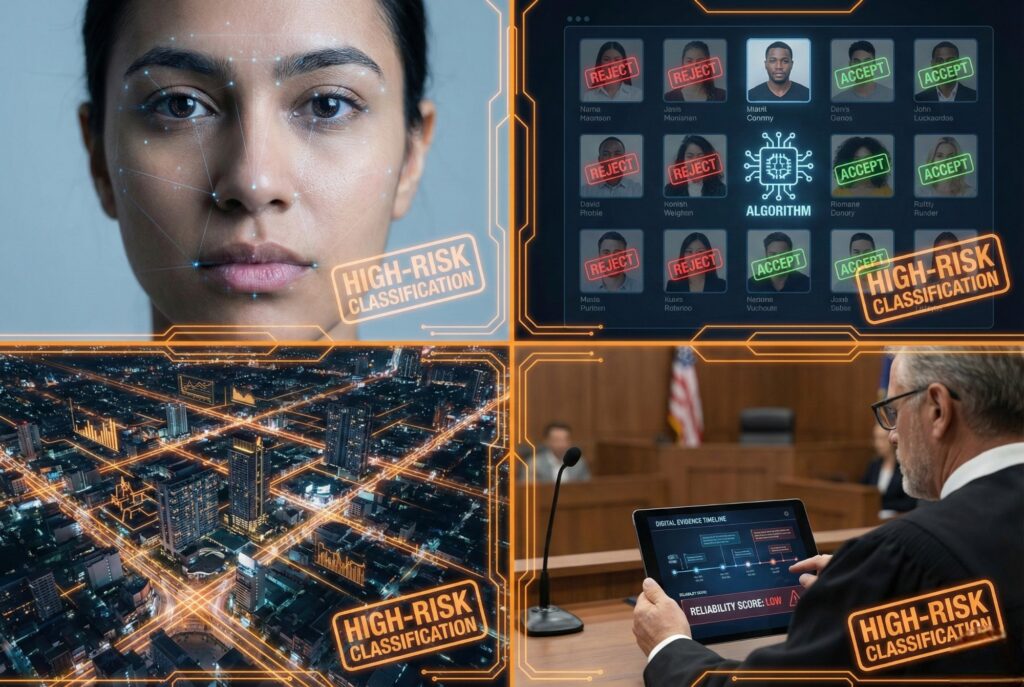

The Act doesn’t treat all code equally. It enforces a rigid, tiered system based on potential harm. Understanding this hierarchy—specifically the nuanced “High-Risk” designation—is the difference between a compliant product and a massive fine. The European Commission defines four risk categories: Unacceptable, High, Limited, and Minimal. Below, we dismantle these definitions to show you exactly what falls into the “High-Risk” trap and how the new Article 6 guidelines might offer a way out.

1. Unacceptable Risk: The Prohibited List

This is the hard “no.” The EU has drawn a red line around systems deemed a direct threat to fundamental rights. These aren’t just regulated; they are banned.

If your model manipulates human behavior using subliminal techniques or exploits vulnerabilities based on age or disability, it’s out. The ban is specific and severe. It covers social scoring, whether run by public authorities or private companies, and biometric categorization that infers sensitive data: think political leanings, religious beliefs, or sexual orientation.

Most critical for the private sector is the prohibition on untargeted scraping of facial images from the internet or CCTV footage to build recognition databases. If your business model relies on a Clearview AI-style approach, you are now operating illegally in the EU. The Act’s narrow law-enforcement exceptions (terrorism, kidnapping) apply to real-time remote biometric identification in public spaces, not to scraping. For database building, the door is shut.

2. High-Risk AI: The Compliance Minefield

This is where the real work begins. “High-Risk” systems are legal, but the barrier to entry is steep. You cannot launch without rigorous conformity assessments, high-quality data governance, and human oversight.

The Act categorizes “High-Risk” through two distinct pathways.

Pathway A: Safety Components

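Is your AI the safety valve in a product that’s already regulated? Then it’s likely High-Risk. If the AI controls the scalpel in a surgical robot, the brakes in a car, or the safety shut-off in industrial machinery, it inherits the risk of the hardware it controls. Strictly, this pathway applies when the product falls under the EU product-safety legislation listed in Annex I and must undergo a third-party conformity assessment.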

Pathway B: Standalone Systems (Annex III)

This is the category that catches most SaaS providers off guard. Your system is High-Risk if it operates in one of eight sensitive areas listed in Annex III. These include:

- Biometrics: Remote identification (non-real-time) and emotion recognition systems.

- Critical Infrastructure: Managing traffic, water, gas, or electricity.

- Education: Algorithms that assign school spots, grade exams, or detect cheating.

- Employment: The software HR uses to screen resumes or monitor performance.

- Essential Services: Credit scoring (excluding fraud detection) and life/health insurance risk assessment.

- Law Enforcement & Justice: Recidivism prediction tools or evidence reliability assessments.

- Migration: Visa screening and polygraphs.

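The “Article 6” Escape Hatch: Being on the Annex III list isn’t always a death sentence. The Act includes a filter in Article 6(3). If your AI performs a narrow procedural task, improves the result of a previously completed human activity, detects decision-making patterns without replacing or influencing the human assessment, or only prepares the ground for an assessment, you might be exempt. The filter never applies to a system that profiles individuals. But be warned: you must document this self-assessment before the system goes to market, and you still have to register the system in the EU database. If you get it wrong, you’re liable.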
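The Pathway B logic and the Article 6 filter can be sketched as a first-pass triage function. This is a simplified illustration, not legal advice: the class names, field names, and area labels are all hypothetical, and the four filter conditions plus the profiling override reflect one reading of Article 6(3). Pathway A (safety components) is deliberately left out.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    HIGH_RISK = "high-risk"
    FILTERED_OUT = "Annex III area, but Article 6(3) filter may apply"
    NOT_ANNEX_III = "outside Annex III (check Pathway A separately)"


# Hypothetical labels for the Annex III areas discussed in this article.
ANNEX_III_AREAS = {
    "biometrics", "critical_infrastructure", "education", "employment",
    "essential_services", "law_enforcement", "migration", "justice",
}


@dataclass
class SystemProfile:
    name: str
    area: str  # one of ANNEX_III_AREAS, or any other string
    profiles_individuals: bool = False
    narrow_procedural_task: bool = False
    improves_completed_human_work: bool = False
    detects_patterns_only: bool = False
    preparatory_task_only: bool = False


def triage(system: SystemProfile) -> Tier:
    """First-pass classification for Annex III systems (assumed rules)."""
    if system.area not in ANNEX_III_AREAS:
        return Tier.NOT_ANNEX_III
    # Profiling of individuals overrides every filter condition.
    if system.profiles_individuals:
        return Tier.HIGH_RISK
    filter_applies = any([
        system.narrow_procedural_task,
        system.improves_completed_human_work,
        system.detects_patterns_only,
        system.preparatory_task_only,
    ])
    return Tier.FILTERED_OUT if filter_applies else Tier.HIGH_RISK


cv_ranker = SystemProfile("cv-ranker", "employment", profiles_individuals=True)
deduplicator = SystemProfile(
    "application-deduplicator", "employment", narrow_procedural_task=True
)
spam_filter = SystemProfile("spam-filter", "email")
```

Here `triage(cv_ranker)` comes back as high-risk because profiling trumps the filter, while `triage(deduplicator)` lands in the filtered-out tier. A result like that is only a prompt to write up the documented self-assessment, not a substitute for it.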

3. Limited Risk: The Transparency Rule

Here, the danger isn’t physical harm; it’s deception. The EU wants users to know when they are talking to a machine.

This tier captures chatbots, emotion recognition systems (outside prohibited contexts), and deepfakes. The obligation is singular: disclosure. If a user interacts with your customer service bot, the bot must identify itself. If you publish AI-generated video or audio, it must be labeled as such, and the label must be machine-readable.

It’s a lighter burden, but mandatory. Hiding the “AI” label is now a breach of law.
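In practice, disclosure tends to mean two small pieces of plumbing: a notice in the conversation and a machine-readable marker on generated media. The sketch below is illustrative only. The Act does not prescribe this wording or this JSON layout; the notice text, function names, and field names are all assumptions.

```python
import json
from datetime import datetime, timezone

# Hypothetical notice text; the Act does not mandate specific wording.
AI_DISCLOSURE = "You are chatting with an automated AI assistant."


def with_disclosure(reply: str, first_turn: bool) -> str:
    """Prepend the AI notice to the first reply of a conversation."""
    return f"{AI_DISCLOSURE}\n\n{reply}" if first_turn else reply


def content_label(generator: str) -> str:
    """Build a JSON sidecar marking a media file as AI-generated.

    The schema is made up for illustration, not an official standard.
    """
    label = {
        "ai_generated": True,
        "generator": generator,
        "labeled_at": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(label, sort_keys=True)


print(with_disclosure("How can I help with your order?", first_turn=True))
print(content_label("demo-video-model"))
```

A real deployment would more likely embed the marker in the file itself (metadata or a watermark) rather than ship a sidecar, but the principle is the same: the label has to be something software can read, not just a caption.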

4. Minimal Risk: The Innovation Safe Zone

Take a breath. This is where most of the market lives.

Spam filters. Video games. Inventory trackers. If your AI creates 3D assets for a game or recommends a movie on a streaming service, it is likely “Minimal Risk.” The Act imposes no new legal obligations here. While the Commission encourages voluntary codes of conduct, there is no penalty for ignoring them. This “safe zone” ensures that low-stakes code doesn’t get strangled by red tape.

Snapshot: Risk Categories vs. Obligations

The table below cuts through the noise. Find your category, check your deadline.

| Feature | Unacceptable Risk | High-Risk | Limited Risk | Minimal Risk |
|---|---|---|---|---|
| Status | Banned | Regulated | Regulated (Light) | Unregulated |
| Main Burden | Market removal | Conformity assessment, risk management, EU registration | Transparency (labelling) | None |
| Examples | Social scoring, facial scraping, behavioral manipulation | CV screening, credit scoring, medical AI | Chatbots, Deepfakes | Spam filters, Games |
| Deadline | Feb 2, 2025 | Aug 2, 2026 (Annex III); Aug 2, 2027 (safety components of regulated products) | Aug 2, 2026 | N/A |
| Max Penalty | €35M or 7% turnover | €15M or 3% turnover | €15M or 3% turnover | N/A |
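The “or” in the penalty row matters. For most companies the ceiling is whichever of the two figures is higher, so the fixed amount is a floor on the cap, not the cap itself. A minimal sketch of that arithmetic follows, using the Unacceptable and High-Risk figures from the table. The function and dictionary names are hypothetical, and it assumes the “whichever is higher” rule; SMEs and startups are treated more leniently under the Act and are not modeled here.

```python
from decimal import Decimal

# (fixed cap in euros, share of worldwide annual turnover)
PENALTY_CAPS = {
    "unacceptable": (Decimal("35000000"), Decimal("0.07")),
    "high_risk": (Decimal("15000000"), Decimal("0.03")),
}


def max_fine(tier: str, annual_turnover_eur: Decimal) -> Decimal:
    """Upper bound on the fine: the higher of the fixed cap or the turnover share."""
    fixed_cap, turnover_share = PENALTY_CAPS[tier]
    return max(fixed_cap, annual_turnover_eur * turnover_share)


# A company with EUR 2 billion turnover deploying a prohibited system:
large_company_cap = max_fine("unacceptable", Decimal("2000000000"))

# A company with EUR 100 million turnover breaching High-Risk obligations:
mid_company_cap = max_fine("high_risk", Decimal("100000000"))
```

For the large company the turnover share wins: 7% of €2 billion is €140 million, four times the fixed figure. For the mid-sized company 3% of turnover is only €3 million, so the €15 million fixed amount sets the ceiling. These are maximums; regulators set the actual fine case by case.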

The Audit: What You Must Do Now

The law is inked. The prohibitions are active. For High-Risk systems, the major deadline is August 2, 2026.

That might sound far off. It isn’t. Start your audit today. Do not assume your HR tool or credit assessment algorithm is “Minimal Risk” just because it’s B2B software. If it touches the categories in Annex III, assume you are in the crosshairs.

Watch for the Commission’s upcoming guidelines on Article 6 derogations. These will be the litmus test for opting out of the “High-Risk” designation. Until then, treat every sensitive system as a liability.