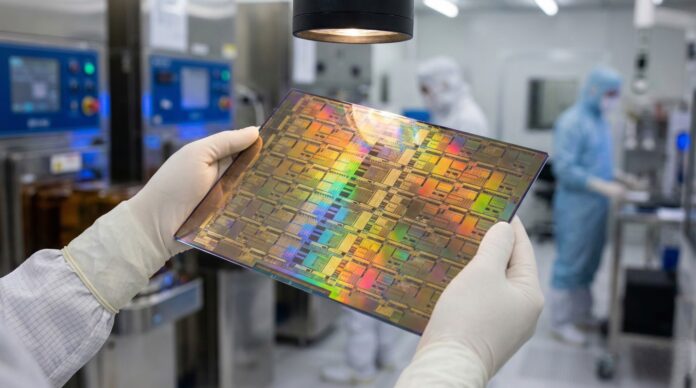

Chip manufacturing follows a strict rule. You take a silicon wafer, and you slice it into hundreds of tiny, individual squares. Cerebras Systems ignored that. They kept the wafer whole. The result is a chip the size of a dinner plate—a “wafer-scale” engine that keeps all computation and memory on a single, massive slab of silicon.

This approach, currently embodied in the Cerebras Wafer Scale Engine 3 (WSE-3), is a direct response to one of the biggest bottlenecks in artificial intelligence: moving data. Traditional clusters rely on miles of copper and optical cabling to connect thousands of GPUs. Cerebras removes most of those wires by keeping compute and memory on one substrate, which yields on-chip bandwidth figures that networked devices cannot match. But this engineering marvel fights physics at every turn, facing the cooling, power, and manufacturing hurdles that have historically kept wafer-scale designs from reaching production.

The Interconnect Bottleneck

Why build a chip the size of a pizza? Because moving data is slow. In a standard data center, training a frontier Large Language Model (LLM) in the class of GPT-4 takes thousands of GPUs, such as NVIDIA’s H100 or B200. These chips have to talk to each other constantly to coordinate.

This chatter happens over cables and network switches. Compared to signals moving between transistors on the same piece of silicon, sending data out of one chip, down a cable, and into another server is agonizingly slow. It burns power. It creates lag. The result is the interconnect cousin of the “memory wall”: processors idling, waiting for data that’s stuck in traffic.

Wafer-scale integration removes most of that “off-chip” penalty. Each compute core sits right next to its own slice of memory on the same uncut silicon, and neighboring cores talk over on-wafer wiring. Data stays on the silicon instead of detouring through network cards and cables.

The Tech: How WSE-3 Works

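TSMC builds the WSE-3 on a 5nm process, but the similarities to standard tech end there. Conventional chips are capped by the lithography reticle, the largest area a scanner can pattern in one exposure (about 858 square millimeters), so even the biggest GPUs top out around 800. The WSE-3 covers over 46,000, because Cerebras patterns a grid of reticle-sized tiles across the wafer and wires across the boundaries between them.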

Solving the Yield Problem

Manufacturers dice wafers because silicon is imperfect. Dust. Microscopic flaws. If a defect hits a small chip, you trash it. No big deal. But if you try to use the whole wafer, one speck of dust theoretically ruins the entire multi-million dollar device. That’s the yield trap.
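The yield trap can be made concrete with the classic Poisson yield model: for a defect density D0, the chance that a die of area A has zero defects is exp(-D0 · A). The sketch below assumes a purely illustrative D0 of 0.1 defects per cm² (real foundry figures are not public and vary by process); it is a textbook approximation, not TSMC’s or Cerebras’s actual yield model.

```python
import math

# ASSUMPTION: illustrative defect density only; real foundry numbers are
# proprietary and change as a process matures.
DEFECT_DENSITY_PER_CM2 = 0.1
MM2_PER_CM2 = 100.0


def expected_defects(area_mm2: float, d0_per_cm2: float = DEFECT_DENSITY_PER_CM2) -> float:
    """Average number of defects expected to land on a die of this area."""
    return d0_per_cm2 * area_mm2 / MM2_PER_CM2


def poisson_yield(area_mm2: float, d0_per_cm2: float = DEFECT_DENSITY_PER_CM2) -> float:
    """Probability that a die of this area has zero defects (Poisson model)."""
    return math.exp(-expected_defects(area_mm2, d0_per_cm2))


if __name__ == "__main__":
    for name, area_mm2 in [("H100-sized die", 814.0), ("Whole WSE-3 wafer", 46_225.0)]:
        print(
            f"{name}: {expected_defects(area_mm2):.1f} expected defects, "
            f"defect-free probability {poisson_yield(area_mm2):.2e}"
        )
```

Under this assumption an H100-sized die comes out clean a bit under half the time, while a whole wafer expects about 46 defects and has effectively zero chance of being flawless.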

Cerebras sidesteps this with redundancy. They build in slightly more cores than they need. Defective cores are identified and mapped out, and the on-wafer network routes signals around the dead zones, with spare cores taking their place. Because each core is tiny, a defect costs only a sliver of the wafer instead of the whole thing. That lets Cerebras ship a functionally “perfect” wafer even when the silicon has physical flaws.
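The idea of routing around dead cores can be sketched as a remapping from a “logical” grid that software sees onto the physical grid. The scheme below is a hypothetical simplification, not Cerebras’s actual fabric: each physical row carries a few spare cores, and logical cores in a row are assigned in order to whichever physical cores still work. Shifting along the row keeps logical neighbors in the same row and physically close. The function name `build_row_remap` and its parameters are illustrative inventions.

```python
from typing import Dict, Optional, Set, Tuple

Coord = Tuple[int, int]  # (row, column)


def build_row_remap(
    rows: int,
    logical_cols: int,
    spares_per_row: int,
    defective: Set[Coord],
) -> Optional[Dict[Coord, Coord]]:
    """Map every logical core to a working physical core, or return None.

    HYPOTHETICAL scheme: each physical row holds logical_cols + spares_per_row
    cores. Logical cores in a row are assigned, in order, to that row's working
    physical cores, so defective cores are simply skipped.
    """
    physical_cols = logical_cols + spares_per_row
    mapping: Dict[Coord, Coord] = {}
    for r in range(rows):
        working = [c for c in range(physical_cols) if (r, c) not in defective]
        if len(working) < logical_cols:
            return None  # more defects in this row than spares: the row cannot be repaired
        for logical_c in range(logical_cols):
            mapping[(r, logical_c)] = (r, working[logical_c])
    return mapping


if __name__ == "__main__":
    defects = {(0, 2), (3, 0)}
    remap = build_row_remap(rows=4, logical_cols=6, spares_per_row=1, defective=defects)
    assert remap is not None
    print(remap[(0, 1)], remap[(0, 2)], remap[(3, 0)])
```

Here logical core (0, 2) shifts onto physical core (0, 3) because (0, 2) is dead, and software never needs to know. A row with more defects than spares makes the mapping fail, which is why the number of spares matters.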

Comparison: WSE-3 vs. NVIDIA H100

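The table below highlights the sheer scale difference. Note that a single WSE-3 is designed to replace a cluster of GPUs, not a single card, and that some rows are not like-for-like: a CUDA core and a WSE-3 core are very different units, and the bandwidth row compares the H100’s off-chip HBM with the WSE-3’s on-chip SRAM.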

| Feature | NVIDIA H100 (Hopper, SXM) | Cerebras WSE-3 | Difference Factor |
|---|---|---|---|
| Physical Area | 814 mm² | 46,225 mm² | ~57x Larger |
| Transistors | 80 Billion | 4 Trillion | 50x More |
| Compute Cores | 16,896 CUDA cores (+528 Tensor Cores) | 900,000 AI cores | ~53x More |
| On-Chip Memory | 50 MB L2 Cache | 44 GB SRAM | 880x More |
| Memory Bandwidth | 3.35 TB/s (off-chip HBM3) | 21,000 TB/s (21 PB/s, on-chip SRAM) | ~6,300x Higher |
| Power Draw | ~700 W (GPU module) | ~23,000 W (full CS-3 system) | ~33x Higher |
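The “Difference Factor” column is simple division, so recomputing it is a quick sanity check on the table. The sketch below uses the table’s headline figures, taking the H100 core count as its 16,896 CUDA cores and treating 23 kW as whole-system power for the WSE-3; the dictionary layout is just an illustrative choice.

```python
from typing import Dict, Tuple

# Headline specs from the comparison table, as (H100, WSE-3) pairs.
# Note: the WSE-3 power figure is for the whole CS-3 system, and the two
# bandwidth figures measure different memories (off-chip HBM3 vs. on-chip SRAM).
SPECS: Dict[str, Tuple[float, float]] = {
    "area_mm2": (814, 46_225),
    "transistors": (80e9, 4e12),
    "cores": (16_896, 900_000),  # H100 SXM CUDA cores vs. WSE-3 AI cores
    "on_chip_memory_bytes": (50e6, 44e9),
    "memory_bandwidth_bytes_per_s": (3.35e12, 21e15),
    "power_w": (700, 23_000),
}


def difference_factors(specs: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Return WSE-3 value divided by H100 value for every metric."""
    return {metric: wse3 / h100 for metric, (h100, wse3) in specs.items()}


if __name__ == "__main__":
    for metric, factor in difference_factors(SPECS).items():
        print(f"{metric:<30} {factor:>10,.1f}x")
```

Run as-is, it reproduces the ~57x area, 50x transistor, and 880x memory factors, and puts the bandwidth gap at about 6,270x and the core-count gap at about 53x. The power ratio (about 33x) compares a whole system against a single GPU module, so it overstates the chip-to-chip gap.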

The Reality of the “Hype”

The specs look alien. But specs aren’t everything. Integrating this hardware is a logistical nightmare compared to slotting in a standard server blade.

The Advantages

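1. The Firehose: 21 PB/s of memory bandwidth. That is the metric that matters, because it keeps the cores fed. For inference (actually running the AI to generate text), each new token requires streaming the model’s weights past the compute, so memory bandwidth largely sets the speed limit. Cerebras has demonstrated Llama 3 models generating text at speeds GPU clusters struggle to match.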

2. Coding, Simplified: Programming 1,000 GPUs is hard. You have to split the model, manage the timing, and pray the interconnect holds up. To software, the WSE-3 looks like one big chip, so a model that fits on a single wafer needs no complex sharding.
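The firehose argument can be sketched with roofline-style arithmetic: if generating one token at batch size 1 means reading every weight from memory once, then memory bandwidth divided by model size bounds tokens per second. The model size here (8 billion parameters at 2 bytes each) is a hypothetical example, and the bandwidths are the table’s headline figures.

```python
def decode_ceiling_tokens_per_s(
    params: float, bytes_per_param: float, mem_bw_bytes_per_s: float
) -> float:
    """Upper bound on single-stream generation speed.

    Assumes a dense model whose full set of weights must be read from memory
    once per generated token (batch size 1), and ignores compute limits,
    KV-cache traffic, and communication overhead.
    """
    bytes_per_token = params * bytes_per_param
    return mem_bw_bytes_per_s / bytes_per_token


if __name__ == "__main__":
    PARAMS = 8e9          # hypothetical 8B-parameter dense model
    BYTES_PER_PARAM = 2   # 16-bit weights (16 GB total, small enough for 44 GB of SRAM)
    for name, bw in [("H100 (HBM3)", 3.35e12), ("WSE-3 (on-chip SRAM)", 21e15)]:
        ceiling = decode_ceiling_tokens_per_s(PARAMS, BYTES_PER_PARAM, bw)
        print(f"{name}: at most {ceiling:,.0f} tokens/s")
```

On these assumptions the H100’s ceiling is about 209 tokens per second, versus well over a million for the WSE-3. Neither number is a benchmark: real systems fall short of these bounds, the WSE-3 by a wide margin, because other limits take over long before the bandwidth ceiling. The sketch only shows why bandwidth is the number to watch for inference.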

The Challenges

1. The Heat: You don’t plug this into a wall outlet. A CS-3, the custom system built around the chip, draws about 23 kilowatts. That’s a rack’s worth of power concentrated around a single square of silicon. Air cooling alone can’t keep up, so the chassis pumps water directly behind the wafer to keep it from frying.

2. The Fit: Data centers like 19-inch racks. They like standard voltages. Cerebras demands custom plumbing and heavy-duty power delivery. It’s not a drop-in replacement.

3. Memory Capacity: 44GB of SRAM is fast, but it’s small. GPU clusters have terabytes of HBM. For the truly massive models, you still need to link multiple wafers together, which reintroduces the very interconnect problems Cerebras tried to solve—just at a different scale.
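Two of the numbers in this list are easy to sanity-check. The sketch below estimates how much water a 23 kW load needs, using heat = mass flow × specific heat × temperature rise, and how many wafers’ worth of SRAM a model’s weights would fill. The 10 K temperature rise, the assumption that all 23 kW goes into the water, and the 70B-parameter model are illustrative choices, not Cerebras specifications.

```python
import math

# Approximate properties of liquid water near room temperature.
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0
WATER_DENSITY_KG_PER_L = 1.0
WSE3_SRAM_BYTES = 44e9


def coolant_flow_l_per_min(heat_w: float, delta_t_k: float) -> float:
    """Water flow needed to absorb heat_w while warming by delta_t_k (Q = m_dot * c_p * dT)."""
    mass_flow_kg_per_s = heat_w / (WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


def wafers_for_weights(params: float, bytes_per_param: float, sram_bytes: float = WSE3_SRAM_BYTES) -> int:
    """Fewest wafers whose combined SRAM could hold the weights (ignores activations and KV cache)."""
    return math.ceil(params * bytes_per_param / sram_bytes)


if __name__ == "__main__":
    # ASSUMPTION: all 23 kW leaves through the water loop with a 10 K temperature rise.
    print(f"Coolant flow: {coolant_flow_l_per_min(23_000, 10):.0f} L/min")
    # HYPOTHETICAL model: 70B parameters stored as 16-bit weights.
    print(f"Wafers needed: {wafers_for_weights(70e9, 2)}")
```

With those assumptions the loop needs roughly 33 liters of water per minute, and a 70B-parameter model in 16-bit precision (140 GB of weights) needs at least four wafers before counting activations or the KV cache.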

Market Impact and Future Outlook

Wafer-scale isn’t just a bigger chip; it’s an attack on the status quo. NVIDIA makes fast chips and sells expensive cables to connect them. Cerebras argues the cable is the enemy.

For now, these engines are likely to remain specialized tools. They belong in supercomputing centers and major AI labs where training speed justifies the headache of custom infrastructure.

The industry is watching. If Cerebras proves that wafer-scale manufacturing works consistently, giants like NVIDIA and Intel might have to rethink the physical limits of the silicon chip. We might be done thinking small.