The Invisible Workflow

It happens on a Tuesday afternoon in Chicago. A marketing copywriter, stalled by corporate red tape, pastes a confidential product launch strategy into a personal generative AI account. The tool spits out a campaign outline instantly. Friction removed. Deadline met. But in that brief, productive transaction, proprietary intellectual property left the safety of the enterprise firewall. It may now be training data for a public model that a competitor could query tomorrow.

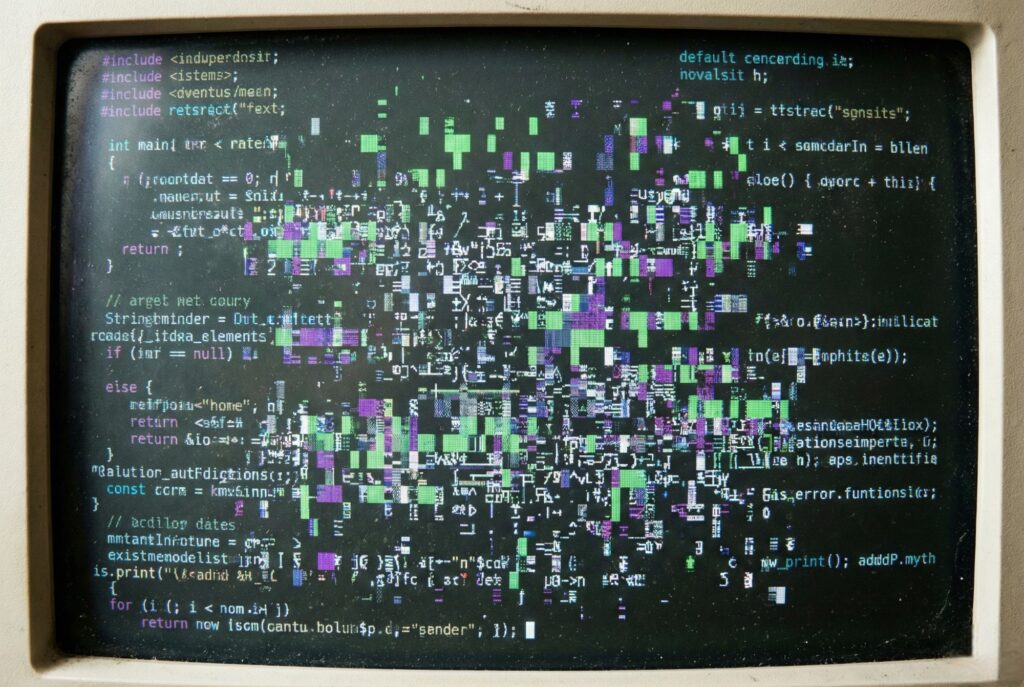

This is “Shadow AI.” It is arguably among the most pervasive and least documented risks in the modern enterprise.

Unlike its predecessor, Shadow IT, which usually involved unauthorized software installations, Shadow AI is elusive. It lives in the browser. It is largely invisible to traditional network monitoring. And it is driven not by malice, but by employees desperate for speed. A 2025 report by Cyberhaven notes that usage of these tools has grown 61x over the last two years. Even more telling? Mid-level employees adopt them at rates 3.5 times higher than their managers. The perimeter hasn’t just been breached. It has been dissolved.

Defining the Threat Surface

Shadow AI is the unsanctioned use of artificial intelligence tools, models, or APIs. The intent is almost always benign—automating code, summarizing messy meeting notes, drafting emails. The consequences, however, are severe.

The danger isn’t just leakage; it’s the “learning” mechanism of Large Language Models (LLMs). When an employee feeds sensitive data—customer PII, source code, financial projections—into a public tool, that data can become part of the model’s permanent training set. There is no “un-read” button for a neural network. Once the pattern is absorbed, it’s there.

Gartner predicts that by 2030, 40% of enterprises will experience a security or compliance incident directly linked to Shadow AI. This is not a remote hypothetical. It is the predictable consequence of undocumented data flows.

The Scale of the Problem

This adoption curve is unlike almost any software trend before it. Why? Because the barrier to entry is zero.

No installation. No corporate credit card. No admin rights.

A 2025 analysis by Netskope revealed that 47% of generative AI users in the enterprise rely on personal accounts rather than corporate-sanctioned workspaces. This creates a bifurcation: two distinct realities within a company. You have the “official” workflow—slow, compliant, monitored. Then you have the “shadow” workflow—fast, agile, and completely opaque.

Security leaders are currently fighting a visibility war. Traditional Data Loss Prevention (DLP) tools trigger on file uploads or USB transfers. They rarely parse the semantic context of a text block pasted into a browser prompt.
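To make the gap concrete, here is a minimal sketch of what inspecting a pasted prompt could look like. It is pattern matching, not true semantic analysis, and everything in it is an assumption for illustration: the `scan_prompt` function, the pattern names, and the regular expressions are hypothetical and far narrower than what a production DLP policy would need.

```python
import re

# Hypothetical patterns for illustration only. A real policy would need
# much broader coverage and tuning against false positives.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "api_key": re.compile(r"\b(?:sk|pk)_[A-Za-z0-9]{16,}\b"),
    "confidential_marker": re.compile(
        r"\b(?:confidential|internal only)\b", re.IGNORECASE
    ),
}


def scan_prompt(text: str) -> dict[str, int]:
    """Count matches per pattern name in a block of pasted text."""
    findings = {}
    for name, pattern in SENSITIVE_PATTERNS.items():
        count = len(pattern.findall(text))
        if count:
            # Only report patterns that actually matched.
            findings[name] = count
    return findings
```

A browser extension or proxy could call something like `scan_prompt` on text before it is submitted and warn the user when the result is non-empty. Where that hook lives is a design choice this sketch does not address.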

Sanctioned vs. Shadow: A Critical Comparison

To understand the gap security teams must bridge, we have to look at the architecture. Here is the difference between the tools you approved and the tools your teams are actually using.

| Feature | Sanctioned Enterprise AI | Shadow AI (Public/Personal) |
|---|---|---|
| Data Retention | Zero-retention (discarded after session) | Indefinite (often used for training) |
| Identity Management | SSO / MFA integrated | Personal email / weak authentication |
| Auditability | Full logging of prompts and outputs | Black box; no centralized logs |
| Compliance | SOC 2, HIPAA, and GDPR aligned | User assumes all liability |
| Model Access | Version-controlled, static models | Dynamic updates (behavior changes daily) |

The “AI Technical Debt” Crisis

Leakage is the acute symptom. “AI Technical Debt” is the chronic disease.

When developers use unauthorized AI coding assistants to generate scripts, or analysts use them to build financial models, the organization inherits code and logic that it did not author and cannot fully explain.

If a shadow tool generates a SQL query deployed into production, and that tool is later updated or taken offline, the enterprise is left maintaining an artifact with no documentation. The provenance of the logic is lost. This erodes “institutional memory.” When systems break, engineers can usually trace the human logic behind the code. With Shadow AI-generated assets, the logic is often a stochastic guess by a model that no longer exists in that specific form.

Strategic Mitigation: Governance Over Blocking

The instinctive reaction for many CISOs is to block the domains. The history of Shadow IT suggests that blanket blocking tends to fail.

Blocking OpenAI, Anthropic, or Perplexity creates a game of whack-a-mole you will lose. It simply drives users to obscure, less secure alternatives. A smarter strategy involves “racking and stacking” the risk:

- Discovery: Use detailed browser telemetry. Find out what tools are actually running.

- Triage: Bucket the tools. “Sanctioned.” “Tolerated.” “Blocked.”

- Substitution: If employees use a shadow tool for coding, the organization must immediately provide an enterprise-grade alternative—like GitHub Copilot Enterprise—that solves the problem without exposing the IP.
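The discovery and triage steps above can be sketched as a small classifier over visited URLs. This is only a sketch under stated assumptions: the `triage` and `summarize` functions, the policy sets, and the placeholder domains are all hypothetical, and the input is assumed to be a plain list of URLs exported from whatever browser telemetry the organization already collects.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical policy lists. The domains are placeholders, not recommendations.
SANCTIONED = {"copilot.example-corp.com"}
TOLERATED = {"chat.example-ai.com"}
BLOCKED = {"free-ai-tool.example.net"}


def triage(url: str) -> str:
    """Bucket a single URL by its hostname."""
    host = (urlparse(url).hostname or "").lower()
    if host in SANCTIONED:
        return "sanctioned"
    if host in TOLERATED:
        return "tolerated"
    if host in BLOCKED:
        return "blocked"
    # Anything not yet on a list is surfaced for review, not silently dropped.
    return "unreviewed"


def summarize(visited_urls: list[str]) -> Counter:
    """Count how many visited URLs fall into each bucket."""
    return Counter(triage(u) for u in visited_urls)
```

The "unreviewed" bucket is the useful output here: it is the list of tools nobody has made a decision about yet, which is where substitution conversations can start.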

Leading organizations are now implementing “AI Bill of Materials” (AI BOM) protocols. Manufacturing tracks every screw and bolt; software teams must now track which AI models touched which parts of the codebase.
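One way to picture an AI BOM is as a list of records tying each file to the model that helped produce it. The sketch below is illustrative only: the `AIBOMEntry` fields, the `to_manifest` and `files_touched_by` helpers, and the JSON layout are assumptions, not an established schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AIBOMEntry:
    """One hypothetical record: which model touched which file."""
    file_path: str
    model_name: str
    model_version: str
    tool: str
    reviewed_by: str
    generated_on: str  # ISO 8601 date, e.g. "2025-03-04"


def to_manifest(entries: list[AIBOMEntry]) -> str:
    """Serialize the entries as a JSON manifest that can live in the repo."""
    return json.dumps([asdict(e) for e in entries], indent=2, sort_keys=True)


def files_touched_by(entries: list[AIBOMEntry], model_name: str) -> list[str]:
    """List, in sorted order, the files a given model contributed to."""
    return sorted({e.file_path for e in entries if e.model_name == model_name})
```

If a model is later updated or retired, a query like `files_touched_by(entries, "example-model")` gives engineers a starting list of artifacts to re-review, which is the provenance that is otherwise lost.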

The Path Forward

Shadow AI is not a malware infection to be cured. It is a market signal.

It tells you exactly where corporate tools are failing to meet the speed requirements of the workforce. The goal of enterprise security in 2026 is not to eliminate Shadow AI. It is to bring it into the light. Convert it from an unknown risk into a managed asset.

Shift from prohibition to observation. Harness the velocity of AI without sacrificing data integrity. The future belongs to companies that can institutionalize the innovation currently happening in the dark.